Local LLM Runners: Ollama, GPT4All, and LMStudio

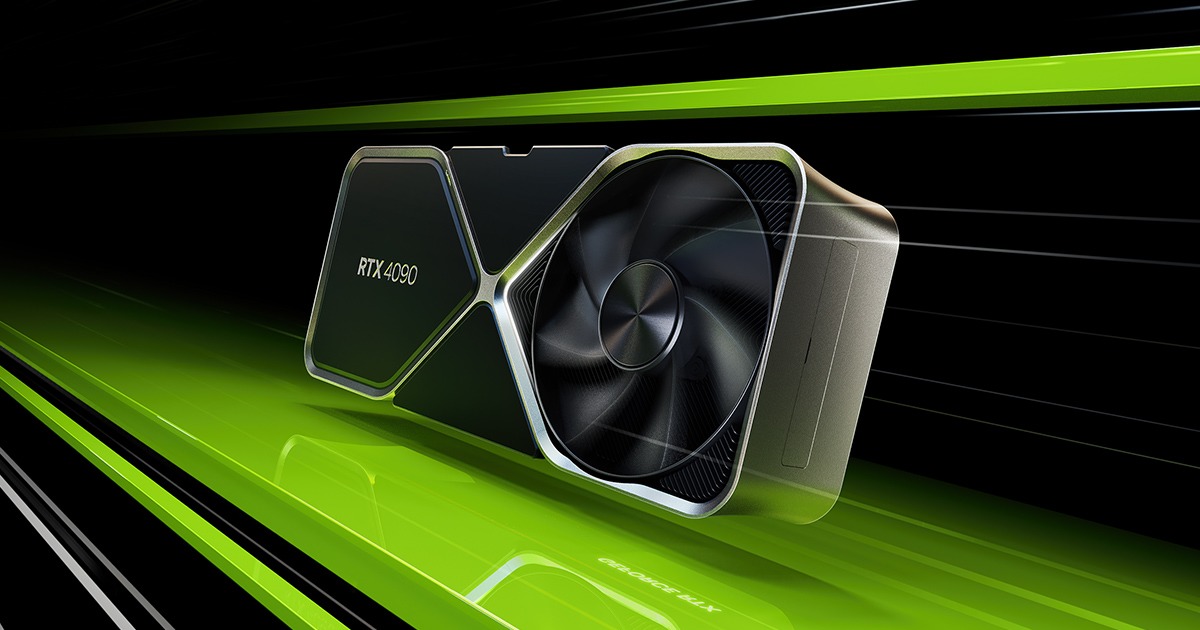

This guide compares local LLM runners (Ollama, GPT4All, and LMStudio) for running models on an NVIDIA GeForce RTX 4090. Here’s a breakdown of the options:

1. Ollama

• Pros:

• Excellent for macOS and Apple Silicon (M1/M2).

• Focuses on ease of use, with pre-configured models.

• Runs on NVIDIA GPUs through CUDA without extra setup.

• Cons:

• Exposes few tuning options for high-end GPUs like the RTX 4090.

• Can be slower than runners built specifically for NVIDIA GPUs, such as ExLlama.

• Best For:

• Users with minimal technical experience who prioritize ease of use.
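For readers who would rather script Ollama than use it interactively, here is a minimal sketch of calling its local HTTP API from Python. It assumes an Ollama server is running on the default port 11434 and that a model has already been pulled. The helper names (`build_generate_request`, `tokens_per_second`, `generate`) are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> dict:
    """Build a non-streaming request body for the /api/generate endpoint."""
    return {"model": model, "prompt": prompt, "stream": False}


def tokens_per_second(response: dict) -> float:
    """Generation speed from the response's timing fields.

    eval_count is the number of generated tokens;
    eval_duration is reported in nanoseconds.
    """
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def generate(model: str, prompt: str) -> dict:
    """Send the request to the local server and return the parsed JSON reply."""
    body = json.dumps(build_generate_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return json.load(reply)
```

With a server running, `generate("llama3", "Explain quantization in one sentence.")` returns a dict whose `"response"` field holds the generated text (`"llama3"` is only an example tag; use whichever model you have pulled). Passing that dict to `tokens_per_second` gives a quick read on how fast the GPU is generating.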

2. GPT4All

• Pros:

• Supports NVIDIA GPUs with CUDA acceleration.

• Works with a variety of quantized models (e.g., 4-bit, 8-bit).

• Lightweight and user-friendly, with CLI and GUI options.

• Supports LLaMA, Falcon, and GPT-J family models.

• Cons:

• Performance may not fully utilize the RTX 4090’s capabilities without further tuning.

• Lacks advanced features like memory optimization for massive models.

• Best For:

• Running small-to-medium-sized models efficiently on NVIDIA GPUs.

• Use cases that don’t require extensive fine-tuning or multi-GPU setups.
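To make "small-to-medium-sized" concrete, here is a rough back-of-the-envelope sketch of how much memory model weights need at different quantization levels, compared against the RTX 4090’s 24 GB of VRAM. The 2 GB overhead for context cache and activations is an assumption chosen for illustration; real overhead depends on the runner and context length.

```python
RTX_4090_VRAM_GB = 24  # the RTX 4090 ships with 24 GB of VRAM


def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(
    params_billion: float,
    bits_per_weight: int,
    overhead_gb: float = 2.0,  # assumed allowance for cache and activations
    vram_gb: float = RTX_4090_VRAM_GB,
) -> bool:
    """True if the weights plus the assumed overhead fit in VRAM."""
    return weight_memory_gb(params_billion, bits_per_weight) + overhead_gb <= vram_gb


for size in (7, 13, 70):
    for bits in (16, 8, 4):
        gb = weight_memory_gb(size, bits)
        verdict = "fits" if fits_in_vram(size, bits) else "does not fit"
        print(f"{size}B @ {bits}-bit: {gb:.1f} GB ({verdict})")
```

Under these assumptions a 13B model fits comfortably at 8-bit (13 GB) but not at 16-bit (26 GB), and a 70B model does not fit on a single card even at 4-bit (35 GB).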

3. LMStudio

• Pros:

• Actively developed with GPU acceleration support.

• Works seamlessly with LLaMA-based models and others that support quantization.

• Great for running quantized models (4-bit/8-bit) that make good use of the RTX 4090.

• Focuses on a polished GUI for local usage.

• Cons:

• Still catching up to more mature frameworks in terms of advanced GPU optimization.

• Best For:

• Users who want a GUI runner optimized for NVIDIA GPUs without needing extensive CLI knowledge.

4. Best Alternatives for RTX 4090

If your focus is maximizing performance on the RTX 4090, consider:

Text Generation WebUI

• Why it’s better:

• Fully utilizes NVIDIA GPUs with CUDA and memory-efficient loading for large models.

• Supports advanced features like LoRA and 4-bit quantization.

• Best For:

• Full control over model loading, especially for large LLaMA, Falcon, or GPT-NeoX models.
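LoRA matters on a single GPU because it trains two small low-rank matrices instead of a full weight matrix. The sketch below compares the parameter counts for one square projection layer. The hidden size of 4096 and rank of 8 are illustrative assumptions, not settings taken from Text Generation WebUI.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in a dense weight matrix of shape (d_out, d_in)."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: (d_out x rank) and (rank x d_in)."""
    return rank * (d_in + d_out)


d = 4096   # assumed hidden size for illustration
rank = 8   # assumed LoRA rank for illustration

full = full_params(d, d)
lora = lora_params(d, d, rank)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
# prints: full: 16,777,216  LoRA: 65,536  ratio: 0.39%
```

Training well under 1% of the parameters per adapted layer is what makes fine-tuning feasible next to a quantized base model in 24 GB of VRAM.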

KoboldAI

• Why it’s better:

• Optimized for creative writing and general-purpose LLM tasks.

• GPU acceleration works well with NVIDIA GPUs.

• Best For:

• Creative tasks or interactive storytelling with local models.

ExLlama

• Why it’s better:

• Optimized for LLaMA models.

• Extremely fast and memory-efficient on high-end GPUs like the RTX 4090.

• Best For:

• Power users running LLaMA-based models at maximum speed.
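Claims like "extremely fast" are easiest to check by measuring tokens per second on your own card. Below is a runner-agnostic sketch: it times any callable that takes a prompt and returns a list of tokens. The fake runners are stand-ins so the example runs without a GPU; in practice you would wrap each runner’s real generate call. All names here are hypothetical.

```python
import time
from typing import Callable, Dict, List

Runner = Callable[[str], List[str]]


def measure_tokens_per_second(generate: Runner, prompt: str, runs: int = 3) -> float:
    """Average wall-clock tokens/s over several calls to generate(prompt)."""
    total_tokens = 0
    total_seconds = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt)
        total_seconds += time.perf_counter() - start
        total_tokens += len(tokens)
    return total_tokens / total_seconds


def benchmark(runners: Dict[str, Runner], prompt: str) -> Dict[str, float]:
    """Measure every runner on the same prompt."""
    return {name: measure_tokens_per_second(fn, prompt) for name, fn in runners.items()}


def make_fake_runner(delay_s: float, n_tokens: int = 20) -> Runner:
    """Stand-in for a real runner: sleeps, then returns dummy tokens."""
    def generate(prompt: str) -> List[str]:
        time.sleep(delay_s)
        return ["tok"] * n_tokens
    return generate


results = benchmark(
    {"fast": make_fake_runner(0.01), "slow": make_fake_runner(0.1)},
    "Hello",
)
for name, rate in sorted(results.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {rate:.0f} tokens/s")
```

Use the same prompt, model, and quantization level for every runner, otherwise the numbers are not comparable.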

Comparison: Ollama vs GPT4All vs LMStudio

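| Feature | Ollama | GPT4All | LMStudio |
| --- | --- | --- | --- |
| Ease of Use | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| GPU Optimization | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Model Support | Limited | Wide | Moderate |
| Quantization | Yes (pre-quantized models) | Yes (4/8-bit) | Yes (4/8-bit) |
| Advanced Features | ❌ | Moderate | Moderate |
| RTX 4090 Utilization | Poor | | |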
